Training Time for SSD Model

When it comes to object detection in images, the SSD (Single Shot MultiBox Detector) model has been a go-to choice for many developers and researchers. It detects objects accurately and quickly enough for real-time use, which makes it a good fit for applications such as self-driving cars, security systems, and robotics. However, one of the most pressing concerns when implementing the SSD model is the time required to train it.

Training a deep learning model takes a considerable amount of time, and the SSD model is no exception. One of the reasons behind the long training time for the SSD model is the sheer number of parameters it has to learn. The model needs to learn features from the input image, predict the bounding boxes, and classify the objects present in the bounding boxes.
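To give a sense of how much the model predicts per image, here is a small sketch that counts the default boxes and output values for the SSD300 configuration from the original SSD paper. The feature map sizes and boxes-per-location values are taken from that configuration; the choice of 21 classes (20 Pascal VOC categories plus background) is an assumption for illustration.

```python
# Feature map side lengths and default boxes per location for the
# SSD300 configuration (assumed here; other SSD variants differ).
FEATURE_MAPS = [38, 19, 10, 5, 3, 1]
BOXES_PER_LOCATION = [4, 6, 6, 6, 4, 4]


def count_default_boxes(feature_maps, boxes_per_location):
    """Sum, over all feature maps, of side * side * boxes per cell."""
    return sum(side * side * k for side, k in zip(feature_maps, boxes_per_location))


def count_outputs(num_boxes, num_classes):
    """Each default box gets one score per class plus 4 box offsets."""
    return num_boxes * (num_classes + 4)


num_boxes = count_default_boxes(FEATURE_MAPS, BOXES_PER_LOCATION)
# 21 classes = 20 Pascal VOC categories + 1 background class (assumption).
outputs_per_image = count_outputs(num_boxes, num_classes=21)
print(num_boxes, outputs_per_image)
```

Every one of these values contributes to the loss on every training step, which is part of why each step is expensive.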

This process requires a significant amount of computational power and time. However, there are various ways to speed up the training process for the SSD model. One approach is to use transfer learning, where the model can utilize pre-trained models to learn features from images and reduce the training time.
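One common way to apply transfer learning is to keep the pre-trained feature extractor fixed and train only the remaining layers. The sketch below illustrates the effect on the number of parameters that need updating. The component names and parameter counts are hypothetical, chosen only for illustration, and real frameworks implement freezing through their own mechanisms.

```python
# Hypothetical model components with made-up parameter counts.
layers = [
    {"name": "backbone", "params": 20_000_000, "trainable": True},
    {"name": "extra_feature_layers", "params": 3_000_000, "trainable": True},
    {"name": "detection_heads", "params": 1_000_000, "trainable": True},
]


def freeze(layers, names):
    """Return a copy of layers with the named components marked non-trainable."""
    return [
        dict(layer, trainable=layer["trainable"] and layer["name"] not in names)
        for layer in layers
    ]


def trainable_params(layers):
    """Count parameters that would still be updated during training."""
    return sum(layer["params"] for layer in layers if layer["trainable"])


frozen = freeze(layers, {"backbone"})
print("trainable without freezing:", trainable_params(layers))
print("trainable with frozen backbone:", trainable_params(frozen))
```

With fewer parameters to update, each step does less work in the backward pass, and the model starts from features that are already useful.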

Another approach is to use data augmentation, where the model learns from transformed copies of the training images, such as flipped or cropped versions, that mimic the variability of real-world scenarios. Augmentation does not make each training step faster, but it lets a smaller labeled dataset go further and helps the model generalize.

In conclusion, while the training time for the SSD model may seem daunting, there are ways to expedite the process and optimize its performance. Transfer learning can reduce the overall training time, and data augmentation helps keep object detection accurate.
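To make the data augmentation idea mentioned above concrete, here is a minimal sketch of a horizontal flip for object detection. The key point is that the bounding boxes must be mirrored together with the image. The image representation (a list of pixel rows) and the box format `(xmin, ymin, xmax, ymax)` with an exclusive `xmax` are assumptions made for this example.

```python
import random


def hflip(image, boxes):
    """Flip an image left-to-right and mirror its bounding boxes.

    image: list of rows, each a list of pixel values.
    boxes: list of (xmin, ymin, xmax, ymax) in pixels, xmax exclusive.
    """
    width = len(image[0])
    flipped_image = [row[::-1] for row in image]
    flipped_boxes = [
        (width - xmax, ymin, width - xmin, ymax)
        for xmin, ymin, xmax, ymax in boxes
    ]
    return flipped_image, flipped_boxes


def random_hflip(image, boxes, p=0.5, rng=random):
    """Apply hflip with probability p, otherwise return the inputs unchanged."""
    if rng.random() < p:
        return hflip(image, boxes)
    return image, boxes


image = [[0, 1, 2, 3], [4, 5, 6, 7]]
boxes = [(0, 0, 1, 2)]  # a box covering the leftmost column
flipped_image, flipped_boxes = hflip(image, boxes)
print(flipped_image, flipped_boxes)
```

After the flip, the box that covered the leftmost column covers the rightmost one, so the label still matches the pixels.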

What is SSD?

SSD, or Single Shot MultiBox Detector, is a deep learning model for object detection. It should not be confused with the solid-state drive, the storage device that shares the acronym. Unlike two-stage detectors, which first propose candidate regions and then classify them, SSD predicts bounding boxes and class scores in a single forward pass over the image. As for the question of how long it takes to train SSD, it ultimately depends on the specific model variant and the amount of training data.

Generally speaking, SSD models can often be trained relatively quickly compared with heavier two-stage detectors, thanks to their single-stage architecture. Some common techniques used to train SSDs include transfer learning, data augmentation, and iterative optimization. With these methods, fine-tuning an SSD model on a small dataset can take as little as a few hours, making it a practical tool for a wide range of applications.

Definition and Functionality of SSD Model

SSD stands for Single Shot MultiBox Detector, an object detection model that locates and classifies objects in one pass through a convolutional network. A backbone network (VGG-16 in the original design) extracts features from the input image, and extra convolutional layers produce feature maps at several progressively smaller scales.

At each location on these feature maps, the model evaluates a fixed set of default boxes with different aspect ratios and predicts, for each one, class scores and offsets that adjust the box to fit the object. Larger feature maps catch small objects, and smaller feature maps catch large ones. Non-maximum suppression then removes overlapping duplicate detections. In summary, SSD replaces the separate region-proposal stage of two-stage detectors with a single network, which is what makes it fast enough for real-time detection.

Factors Affecting Training Time

“How long does it take to train SSD?” is a common question asked by those who are planning to use an SSD model in their system or application. The answer is not straightforward, as several factors affect the training time of an SSD model. First, the amount of training data affects the training time.

The more images that need to be processed in each epoch, the longer the training time will be. Second, the SSD variant and its configuration play a crucial role in training time. A model with a lightweight backbone and a small input resolution generally trains faster than one with a heavier backbone and larger inputs.

Third, the system’s hardware specifications and software configuration affect the training time. A fast GPU, enough memory, and an efficient data-loading pipeline all shorten it. Lastly, the training setup, including the optimizer, the learning rate schedule, and whether training starts from pre-trained weights, also determines training time.

In conclusion, the training time of an SSD model depends on various factors, and it’s crucial to consider them when planning a project.

Amount of Data and Model Complexity

When it comes to machine learning, the amount of data and model complexity are two critical factors that significantly impact training time. The more data you have, the longer it takes to train your model. This is because your algorithm needs to analyze and interpret each piece of data, which can take a lot of time, especially with large datasets.

On the other hand, the complexity of the model also plays a vital role in the training process. The more complex the model, the longer it will take to train. This is because a more complex model requires more parameters, which take time to calibrate.

Moreover, the number of layers in a model can also impact training time. A deep neural network with multiple layers requires more time to learn and recognize patterns in data, making it more computationally expensive. However, in some cases, a more complex model can improve the accuracy of the predictions and results.

Training time can be reduced by optimizing data preprocessing, using a high-performance computer or GPU, and implementing parallel processing methods. These methods can significantly improve the training speed and efficiency of a model. However, optimizing these processes is complex and requires the expertise of experienced data scientists and machine learning engineers.

In conclusion, the amount of data and model complexity are two key factors affecting training time in machine learning. While a more complex model may improve accuracy, it can also significantly increase training time, making it crucial to balance both factors when designing a machine learning model.
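As a rough illustration of how depth adds parameters, the sketch below counts the weights and biases in a stack of convolutional layers. The layer shapes are hypothetical and were picked only to show the trend; they are not the layers of any particular SSD variant.

```python
def conv2d_params(in_channels, out_channels, kernel_size, bias=True):
    """Parameters in one 2D convolution: k * k * C_in * C_out weights plus biases."""
    weights = kernel_size * kernel_size * in_channels * out_channels
    return weights + (out_channels if bias else 0)


def total_params(layer_specs):
    """Sum parameters over a list of (in_channels, out_channels, kernel_size)."""
    return sum(conv2d_params(*spec) for spec in layer_specs)


# Hypothetical networks: the deeper one appends two wider layers.
shallow = [(3, 64, 3), (64, 128, 3)]
deep = shallow + [(128, 256, 3), (256, 512, 3)]

print("shallow:", total_params(shallow))
print("deep:", total_params(deep))
```

Because the channel counts grow with depth, the two extra layers hold far more parameters than the first two combined, which is why deeper models cost more to train.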

Hardware and Software Specifications

Hardware and software specifications play a significant role in determining the training time of an SSD model. The GPU’s compute throughput and memory, the speed of the CPU and storage that feed it data, and the deep learning framework in use are all factors that influence how long each training step takes. For instance, a GPU with more memory can hold a larger batch, so each epoch needs fewer steps.

On the other hand, even a fast GPU can sit idle if image loading and augmentation on the CPU cannot keep up. Lower-end hardware results in slower steps, leading to longer training runs. It’s also essential to consider the software stack, as an up-to-date framework with GPU-accelerated libraries can shorten each step.

In conclusion, understanding hardware and software specifications and how they influence training time can help individuals and organizations anticipate the resources a training run will need and use them efficiently.

Training Time Estimates for SSD

If you’re wondering how long it takes to train an SSD model, the answer is, it depends. Training time varies depending on various factors such as the size of the dataset, the complexity of the model, and the computational resources available. Generally, training an SSD model can take anywhere from a few hours to a few days.

For example, training an SSD model on a small dataset (about 1,000 images) can take a few hours, while training on a larger dataset (about 10,000 images) can take up to two or three days. These figures are rough and shift with the hardware and settings used. Additionally, training can be accelerated by using a more powerful GPU, distributed training, or by optimizing the code. Training time is an important factor when building an object detection model, so choose hardware and algorithms that keep it manageable while still giving you good results.
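A back-of-the-envelope estimate can be computed from the dataset size, batch size, number of epochs, and the measured time per training step. The sketch below shows the arithmetic. The batch size, epoch count, and seconds-per-step values are hypothetical placeholders, so the results will not match the figures quoted above; measure the step time on your own hardware before relying on the estimate.

```python
import math


def estimate_training_hours(num_images, batch_size, epochs, seconds_per_step):
    """Estimate wall-clock training time from steps and time per step."""
    steps_per_epoch = math.ceil(num_images / batch_size)
    total_steps = steps_per_epoch * epochs
    return total_steps * seconds_per_step / 3600


# Hypothetical settings: batch of 32, 100 epochs, 0.5 s per step.
small = estimate_training_hours(1_000, batch_size=32, epochs=100, seconds_per_step=0.5)
large = estimate_training_hours(10_000, batch_size=32, epochs=100, seconds_per_step=0.5)

print(f"1,000 images: {small:.2f} h")
print(f"10,000 images: {large:.2f} h")
```

The estimate ignores data loading stalls, evaluation passes, and checkpointing, so treat it as a lower bound.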

Real-life Training Time Examples with SSD

When it comes to machine learning models, training time can be a crucial factor to consider. SSD, or Single Shot MultiBox Detector, is a popular object detection algorithm that can detect multiple objects in an image in real-time. However, the training time required for SSD can vary depending on several factors, including the type of dataset used and the hardware configuration.

For instance, a popular dataset such as COCO can take around 2-3 days to train on a single GPU, while a smaller dataset like Pascal VOC can take around 10-12 hours. However, with a higher-end hardware configuration, such as multiple GPUs, the training time can be significantly reduced. It’s important to keep these factors in mind when considering using SSD for object detection in real-world applications.

Average Training Time for SSD Model

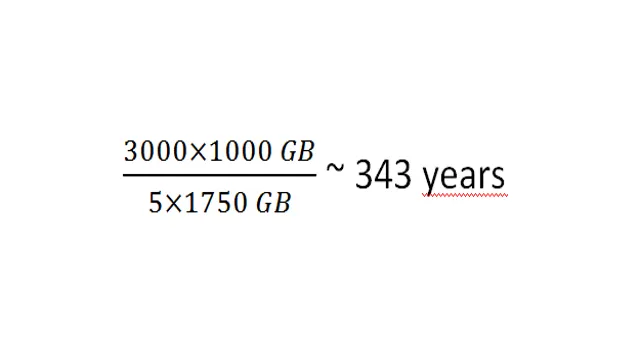

For an AI engineer, estimating the training time is an essential part of building a robust and efficient object detection model. When it comes to Single Shot Detector (SSD) models, the average training time depends on several factors such as the dataset size, learning rate, and GPU resources. In general, training a base SSD model on the COCO dataset, which has 80 object classes, can take approximately 30 to 40 hours with a powerful GPU, such as an Nvidia Tesla V100.

However, if you have limited GPU resources, you might need to split the training process into smaller iterations or use pre-trained models to reduce the training time. Additionally, optimizing the SSD model’s hyperparameters can also decrease the training time significantly while improving its accuracy. In conclusion, accurately estimating the training time for an SSD model requires careful consideration of various factors, but it is a crucial step in building high-quality object detection models.

Tips for Reducing Training Time

One of the most common questions people ask when it comes to training an SSD model is “how long does it take to train SSD?” The answer, unfortunately, is that it depends on various factors, such as the size of the dataset, the complexity of the model, and the hardware used for training. However, there are some tips that can help reduce training time, such as choosing a suitable batch size, utilizing transfer learning, and optimizing the learning rate. One effective way of reducing training time is to choose the batch size carefully.

Larger batches generally process more images per second, but only up to a point. They require more memory and can cause out-of-memory errors, and a very large batch may need a retuned learning rate to converge well. A smaller batch size avoids memory errors but means more steps per epoch. A practical approach is to pick the largest batch that fits comfortably in GPU memory and adjust the learning rate to match.

Another tip for reducing training time is leveraging transfer learning. Transfer learning is a technique that involves utilizing pre-trained models to train a similar model, but with a different dataset. It can significantly speed up the training process by utilizing the knowledge gained from previously trained models.

For SSD models, you can start from weights pre-trained on object detection datasets like COCO or PASCAL VOC to reduce the time it takes to train your new model and often improve its accuracy. Lastly, you can optimize the learning rate to reduce training time. The learning rate determines how large a step the model takes each time it updates the weights and biases that make up the network.

A high learning rate can skip over optimal solutions, leading to suboptimal models, while a low learning rate can take a long time to converge. By finding the optimal learning rate, you can speed up the convergence of the model while improving its accuracy, ultimately reducing the time it takes to train SSD.
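The effect of the learning rate can be seen on a toy problem. The sketch below runs plain gradient descent on f(x) = x², not on an SSD model, and counts the steps needed to get close to the minimum. The function, starting point, and tolerance are assumptions chosen to keep the example small.

```python
def steps_to_converge(lr, start=10.0, tol=1e-3, max_steps=10_000):
    """Minimize f(x) = x**2 by gradient descent.

    Returns the number of steps until |x| < tol, or None if the run
    diverges or does not converge within max_steps.
    """
    x = start
    for step in range(1, max_steps + 1):
        x -= lr * 2 * x  # gradient of x**2 is 2x
        if abs(x) < tol:
            return step
        if abs(x) > 1e12:  # the iterate is blowing up
            return None
    return None


for lr in (0.001, 0.4, 1.1):
    print(lr, steps_to_converge(lr))
```

The smallest rate converges but needs thousands of steps, the middle rate converges in a handful, and the largest overshoots the minimum on every step and diverges. Real detection losses are far less regular, but the same trade-off applies.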

Optimizing Hardware and Software Configurations

Training machine learning models can take a lot of time and resources, but there are ways to optimize your hardware and software configurations to reduce training time. One tip is to use GPUs rather than CPUs for training, as GPUs can handle the large amount of parallel processing required by machine learning models. Another tip is to use optimized software libraries like TensorFlow or PyTorch that can take advantage of GPUs to speed up training.

Additionally, training on a smaller, well-chosen subset of your dataset can shorten each epoch, and data augmentation can help that smaller dataset generalize better. Overall, optimizing your hardware and software configurations can make a significant difference in the time it takes to train your machine learning models, allowing you to see results faster.

Data Preprocessing Techniques

Data preprocessing can be an important step in preparing data for machine learning algorithms. However, this step can often be time-consuming, especially with large datasets. Luckily, there are several tips for reducing training time.

One effective technique is to reduce the dataset size by selecting a subset of the data or using techniques such as data sampling. Additionally, using feature selection methods can help to reduce the number of irrelevant features in the dataset, further cutting down on training time. Another technique is to use normalization or standardization to scale the data, which can improve algorithm performance and reduce the number of iterations required for convergence.
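For image data, standardization usually means subtracting a per-channel mean and dividing by a per-channel standard deviation. The sketch below shows the idea on a handful of RGB pixels using only the standard library. The pixel values are made up, and in practice the statistics would be computed over the whole training set or taken from the dataset used to pre-train the backbone.

```python
import statistics


def channel_stats(pixels):
    """Per-channel mean and population standard deviation of (r, g, b) pixels."""
    channels = list(zip(*pixels))
    means = [statistics.fmean(c) for c in channels]
    stds = [statistics.pstdev(c) for c in channels]
    return means, stds


def standardize(pixels, means, stds, eps=1e-8):
    """Shift each channel to zero mean and scale it to unit variance."""
    return [
        tuple((v - m) / (s + eps) for v, m, s in zip(px, means, stds))
        for px in pixels
    ]


# Made-up pixel values for illustration.
pixels = [(0, 128, 255), (64, 128, 0), (255, 0, 128), (128, 64, 64)]
means, stds = channel_stats(pixels)
scaled = standardize(pixels, means, stds)
print(scaled[0])
```

Putting all channels on a comparable scale tends to make optimization better behaved, which can reduce the number of iterations needed.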

Finally, it’s important to choose the right algorithm for the task, as some algorithms may be more efficient than others for a particular dataset and task. By using these techniques, you can streamline the data preprocessing step and improve the overall efficiency of your machine learning workflow.

Conclusion and Final Thoughts

In the end, the duration of training an SSD model ultimately depends on a multitude of factors, including the size of the dataset, the complexity of the architecture, and the computing resources available. But no matter how long or short the training period may be, one thing is for sure – the payoff is worth the investment. So be patient, stay focused, and remember that good things come to those who train.


FAQs

What is the SSD training process and how long does it typically take?

The training process for SSDs involves gathering and labeling data, creating a neural network architecture, and training the model on the data to optimize its performance. The time it takes to train an SSD can vary depending on factors such as the size of the dataset, the complexity of the model, and the computing power being used. Typically, it can take anywhere from a few hours to several days or even weeks.

Does the training time for SSDs vary for different object detection tasks?

Yes, the training time for SSDs can vary depending on the specific object detection task. Tasks that involve identifying simple objects with distinct features may require less time to train than tasks that involve detecting complex objects with similar features. Additionally, factors such as the size and quality of the dataset can also impact the training time.

Can the training time for SSDs be reduced by using pre-trained models?

Yes, using pre-trained models can significantly reduce the training time for SSDs. Pre-trained models have already been trained on large datasets and can be fine-tuned on a smaller dataset to improve their accuracy for a specific object detection task. This approach can help to reduce the time and resources required for training a new model from scratch.

How can I optimize the training time for my SSD model?

There are several strategies that can help to optimize the training time for an SSD model. These include using a smaller and more targeted dataset, selecting a neural network architecture that is optimized for the specific object detection task, and using parallel processing techniques to speed up the training process. Additionally, using hardware accelerators such as GPUs can also improve the speed and efficiency of SSD training.